Paramount+ on Multi-region.

Shiva Paranandi

October 19, 2023

The Mission

Each week, millions of subscribers around the world come to Paramount+ to stream live UEFA soccer matches, other major live sports and events, and their favorite movies and shows. To keep them engaged with the service, the experience needs to be flawless and of broadcast quality.

Getting to this point required our team to think differently about how we would deliver live experiences on a massive scale to a global audience. In this post I will walk you through our journey to achieving this global scale for our service and give you a view into where we are headed from here.

Entertaining the Planet

As the fastest growing streaming service in the US, we have a user base that keeps increasing, and there is a lot of interest in consuming more content, whether scheduled programming or live events. The traffic patterns of the two are vastly different. Viewers of live events want to get to the event as quickly as possible, whereas viewers of scheduled programming are curious about what else is out there. This means that we need to build and scale for both scenarios.

With Paramount+ available in 45 markets globally, the growing number of live events also means we need to be able to make changes while under high load. The luxury of a maintenance window got thrown out of the window.

Beginnings

The need for multi-region has grown steadily over the years as audiences increasingly become cable cutters or cable nevers, turning to streaming services for entertainment, news and sports. With this switch to streaming, our audience grew substantially in 2020 and 2021 (when Paramount+ was named the fastest growing brand in the United States), and viewership of Paramount+ increased dramatically. Add to this some large scale events, and Paramount+ climbed to new heights in terms of daily active users.

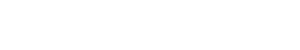

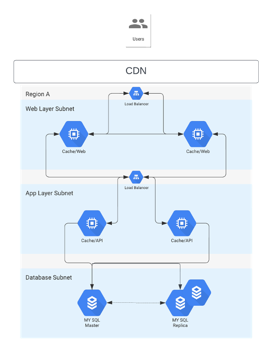

As Paramount+ embarked on finding a way to add scale to our growth, we realized a few things about our infrastructure. A lot of our services were stateless and horizontally scalable. However, these tiers were limited to a single region within Google Cloud. While we could scale these tiers to a different region, one backend infrastructure layer stood in the way: the database. We had been on MySQL, which traditionally allows only a single master for database writes. There are ways around this; however, they require significant investment in the database tier and the data layer tiers to shard the data. This posed our greatest challenge when trying to scale to more than a single region.

The Paramount+ application was already in multiple zones when we moved from the datacenter to the cloud back in 2019. However, moving to a different region in an active-active configuration was going to be the real test.

Before embarking on finding a solution for the database, we needed to set some baseline conditions for multi-region:

● There should be zero downtime during the migration process.

● No region should directly need to talk to another region except in the cases of replication.

● End users should not have to worry about which region they are accessing. This also means the regions should be as homogeneous as possible. We would always have exceptions around some background jobs or corner cases. However, all flows that directly serve end users should be scalable across multiple regions.

● All regions should be equally secure, audited and monitored in the same way.

Besides our requirement for zero downtime and no planned outages, we also wanted zero data loss when moving from one database system to the other. To enable this we took a few key steps. We made sure that the code base hitting the database was compatible with both our old and new databases. This was tricky: tuning queries for two databases is complicated and very time consuming, because one database had a single master whereas the newer one was spread out across multiple regions. For now, suffice it to say that we also had to update the way we were using our current database.

Paramount+ has many properties under its umbrella. One of the goals we established was to take the smallest amongst them and move it to a multi-region setup so that we could perfect the process and implement it widely.

Thankfully our engineering teams had a very good fundamental principle of building stateless, horizontally scalable application tiers. This allowed the Infrastructure and SRE teams to focus more on the database and the ORM layers than worry about all the tiers above.

Migration

At this point the biggest challenge was our database. Paramount+ was hosted on a single master (aka read/write) database, and a single vertically scaled master database can only carry us so far. While the team considered sharding the data and spreading it out, our past experience taught us that this would be a laborious process. We started looking for a new multi-master capable database, with the requirement that it be relational because of the existing nature of the application. This narrowed the field, and after some internal research and POCs we settled on a new player in the database space called Yugabyte (www.yugabyte.com).

Our next step was to figure out the minimum-viable-product (MVP) and the success criteria all the way from the proof of concept (POC) to launching the property in a multi-region setup. The criteria for the POC were, as always, straightforward: they covered scalability, reliability, latency and so on. When it came to the integration, we had a few strict goals in place. The most important was a zero outage database switch. This mattered because Paramount+ had a lot of users and was a truly 24/7 streaming service.

● ORM Compatibility - The ORM layer should be compatible with both databases. This lets us switch quickly between them. While this is easier said than done, it is not impossible. Some features and column types, such as sequences and booleans, differed between our current database and the target database. We had to replace our use of autoincrement with sequences in our existing database to help with the migration.

● CDC (Change Data Capture) - CDC is normally a one-way mechanism for keeping databases in sync. We set it up in both directions to get a two-way continuous data sync between our databases.

● Traffic replay - We built a performance environment that simulates production traffic internally using test user data. Getting this right was extremely important. On top of this, we also built the ability to replay production SQL traffic without needing to copy over user data.
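To make the autoincrement-to-sequence retrofit from the ORM compatibility item a little more concrete, here is a minimal sketch of one way to emulate sequences in a database that lacks them. This is an illustration, not our production code: the `app_sequences` table and the `create_sequence` and `next_ids` helpers are hypothetical names, and Python's built-in `sqlite3` module stands in for the real database so the example is self-contained.

```python
import sqlite3


def create_sequence(conn, name, start=1):
    """Create the (hypothetical) sequence table and register one named sequence."""
    with conn:  # commit on success, roll back on error
        conn.execute(
            "CREATE TABLE IF NOT EXISTS app_sequences ("
            "name TEXT PRIMARY KEY, next_value INTEGER NOT NULL)"
        )
        # Re-registering an existing sequence leaves its counter untouched.
        conn.execute(
            "INSERT OR IGNORE INTO app_sequences (name, next_value) VALUES (?, ?)",
            (name, start),
        )


def next_ids(conn, name, count=1):
    """Reserve `count` consecutive ids from the named sequence."""
    if count < 1:
        raise ValueError("count must be at least 1")
    with conn:
        # Advance the counter first so the reservation happens in one write.
        cur = conn.execute(
            "UPDATE app_sequences SET next_value = next_value + ? WHERE name = ?",
            (count, name),
        )
        if cur.rowcount == 0:
            raise KeyError(f"unknown sequence: {name}")
        (after,) = conn.execute(
            "SELECT next_value FROM app_sequences WHERE name = ?", (name,)
        ).fetchone()
    return list(range(after - count, after))


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    create_sequence(conn, "users_id_seq")
    # The application assigns the id explicitly instead of relying on autoincrement.
    (user_id,) = next_ids(conn, "users_id_seq")
    with conn:
        conn.execute("INSERT INTO users (id, name) VALUES (?, ?)", (user_id, "test-user"))
    print(conn.execute("SELECT id, name FROM users").fetchall())
```

The point of the pattern is that id assignment moves out of a database-specific column feature and into something the data layer controls, so the same application code can run against either database. On a database with native sequences, the helper would simply call the native sequence instead of the table.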

Once we met the criteria above, we started setting up production using this CDC. CDC is a slow process and takes time to catch up. We also set up extensive monitoring and alerting around data incompatibilities.

Once the CDC had fully caught up, we picked a date and started the switchover. While this post makes it sound easy, there were many nerve-wracking moments during the data migration and the switchover. There were a lot of expectations to manage on this new piece of infrastructure.

We essentially have the capability to enable multiple regions. We went from a single region in Google to having the ability to enable multiple regions in a short span of time.

Results

Since launching this new multi-master database and our multi-region environments, we have had incredible success hosting many large scale events, from major award shows to huge sports matches. These events, combined with the robust architecture and infrastructure, give us more confidence that we can meet our users' growing appetite for the amazing content lineup that Paramount+ has.

During this journey we also learned a few key lessons. The most important qualities are patience, tenacity and an innovation mindset that allows us to think outside the box. These qualities are the difference between a successful project and a failure. Thankfully, the team here at Paramount+ has these in abundance.

Testing is key. Don’t rest until you are completely satisfied with the results. Compromising on test results can come back to bite you and wreak havoc when you least expect it. For a project of this size it is always good to plan for failures and alternate paths. The road back to the starting point needs to be smooth, easy and without too many glitches. That way, the stress of only being able to fix forward goes away.

In all, it was a great experience, and we learned many details on this journey that will help Paramount+ and the team grow in the future.